Eleven Multilingual v1: Latest Voice Generation Model

Our current deep learning approach uses more data, more computational power, and new techniques to deliver our most advanced speech synthesis model.

We're excited to unveil Eleven Multilingual v1, our most advanced speech synthesis model, which adds support for seven languages: French, German, Hindi, Italian, Polish, Portuguese, and Spanish. It builds on the research behind Eleven Monolingual v1 and combines more data, more computational power, and new techniques in a more refined model. The model picks up on textual nuance and delivers emotionally rich speech. This step forward opens new possibilities for content creators, game developers, and publishers, and paves the way for using generative media to create more localized, accessible, and imaginative content.

The updated model is accessible across all subscription tiers, and you can test it immediately on our Beta platform.

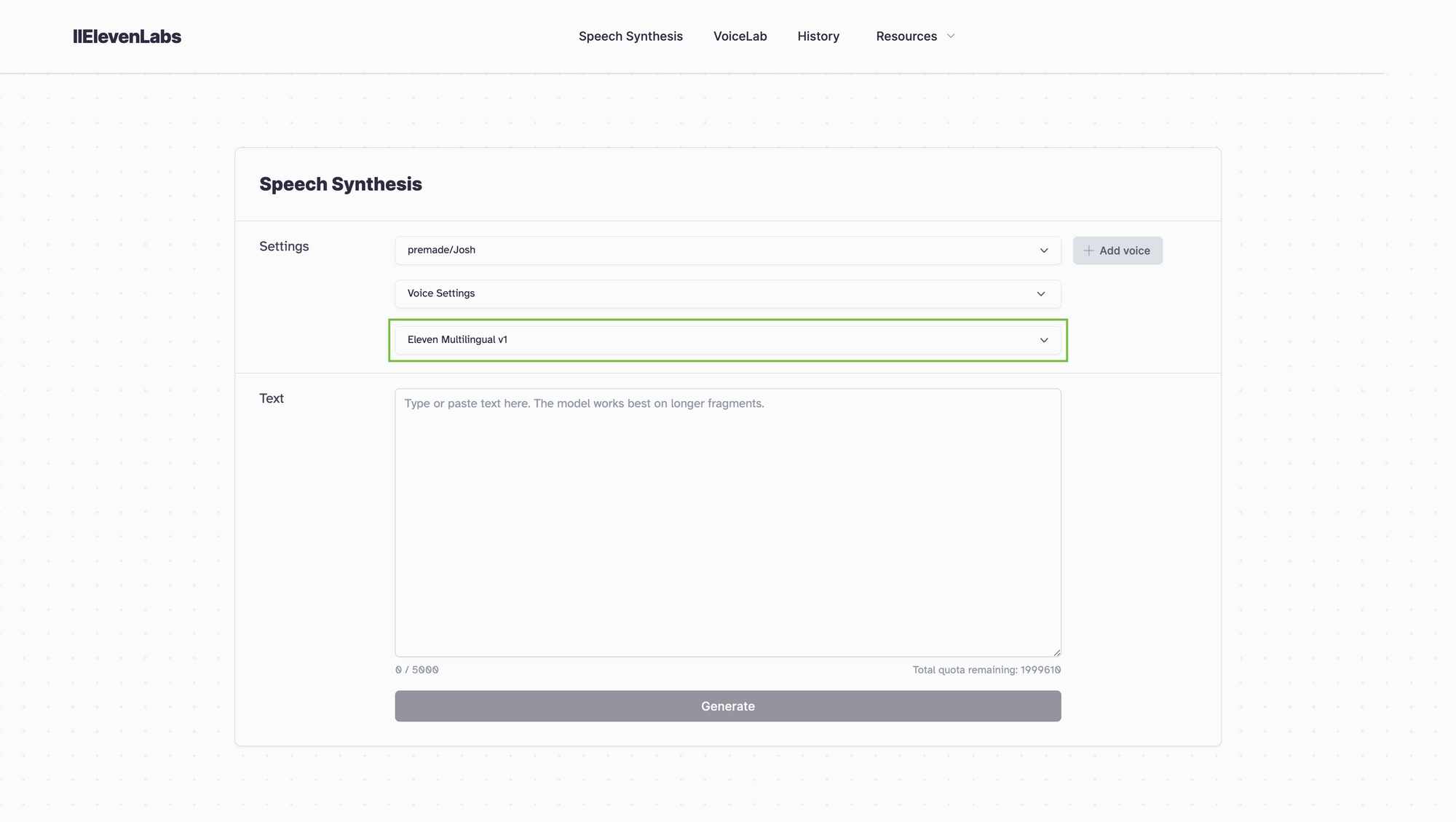

To use it, select it from the newly added drop-down menu in the Speech Synthesis section.

Research Overview

Like its predecessor, the new model is built entirely on our in-house research. It retains everything that made Eleven Monolingual v1 an excellent storytelling tool: it adjusts its delivery to the context and conveys emotion and intent convincingly. Multilingual training extends these strengths to the newly supported languages.

A standout feature of this model is its ability to identify multilingual text and render it accurately. You can now generate speech in several languages from a single prompt while preserving each speaker's distinct voice characteristics. The model already handles mixed-language prompts well, but there is still room for improvement, so for the best results we recommend single-language prompts.

The new model works with the other VoiceLab features, such as Instant Voice Cloning and Voice Design. Voices you create are expected to retain most of their original speech characteristics across languages, including their original accent.

The model does have known limitations: numbers, acronyms, and some foreign words may default to English pronunciation even when they appear in text written in another language. For example, the number "11" or the word "radio" in a Spanish prompt might be pronounced as in English. While we work on improvements, we recommend writing out numbers and acronyms as words in the target language.
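The workaround for numbers can be sketched as a small preprocessing step that runs before the text is submitted for synthesis. This is a minimal illustration, not part of any ElevenLabs tooling: the helper names `spanish_number` and `spell_out_numbers` are hypothetical, the example assumes a Spanish prompt, and it only covers standalone whole numbers from 0 to 99.

```python
import re

# Spanish words for 0-29, each of which is written as a single word.
_UNITS = [
    "cero", "uno", "dos", "tres", "cuatro", "cinco", "seis", "siete",
    "ocho", "nueve", "diez", "once", "doce", "trece", "catorce", "quince",
    "dieciséis", "diecisiete", "dieciocho", "diecinueve", "veinte",
    "veintiuno", "veintidós", "veintitrés", "veinticuatro", "veinticinco",
    "veintiséis", "veintisiete", "veintiocho", "veintinueve",
]

# Spanish words for the tens from 30 to 90.
_TENS = {
    3: "treinta", 4: "cuarenta", 5: "cincuenta", 6: "sesenta",
    7: "setenta", 8: "ochenta", 9: "noventa",
}


def spanish_number(n: int) -> str:
    """Return the Spanish word(s) for an integer from 0 to 99 (hypothetical helper)."""
    if not 0 <= n <= 99:
        raise ValueError("only 0-99 is supported in this sketch")
    if n < 30:
        return _UNITS[n]
    tens, unit = divmod(n, 10)
    if unit == 0:
        return _TENS[tens]
    return f"{_TENS[tens]} y {_UNITS[unit]}"


def spell_out_numbers(text: str) -> str:
    """Replace standalone one- or two-digit numbers with Spanish words.

    Longer numbers (for example years) are left untouched.
    """
    return re.sub(
        r"\b\d{1,2}\b",
        lambda match: spanish_number(int(match.group())),
        text,
    )
```

For example, `spell_out_numbers("Tengo 11 radios")` returns `"Tengo once radios"`. Larger numbers, decimals, and acronyms would need their own handling, and other target languages would need their own word tables.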

Democratization of Voice

ElevenLabs was founded on the ambition of making content universally accessible in any language and any voice. Our team brings together people from Europe, Asia, and the US. As our team and the world grow more multilingual, our commitment to delivering human-quality AI voices in every language only grows stronger.

Our latest Text-to-Speech (TTS) model is the first step toward this vision. With human-quality AI voices, individuals and businesses can tailor audio content to their own needs, values, and preferences. This levels the playing field for independent creators, small businesses, and artists, letting them produce audio experiences that rival those of larger, better-resourced organizations.

These benefits now extend to multilingual, multicultural, and educational settings. Users, companies, and educational institutions can produce authentic audio that resonates with a broader audience. By offering a wide range of voices, accents, and languages, AI helps bridge cultural gaps and promotes global understanding. At Eleven, we believe this greater accessibility fosters more creativity, innovation, and diversity.

Content creators who want to reach diverse audiences now have the tools to bridge cultural gaps and promote inclusivity.

Game developers and publishers can create localized, immersive experiences for international audiences, overcoming language barriers and connecting more personally with players and listeners. They can do this while maximizing engagement and without sacrificing quality or accuracy.

Educational institutions can produce audio content for diverse learners in their target languages, supporting language comprehension and pronunciation skills while accommodating different teaching styles and learning needs.

Accessibility organizations can better support people with visual impairments or learning difficulties by giving them tools to convert hard-to-access materials into a format that suits their preferences, both in content structure and delivery.

We can't wait to see how our current and future creators and innovators push the boundaries of what's possible!